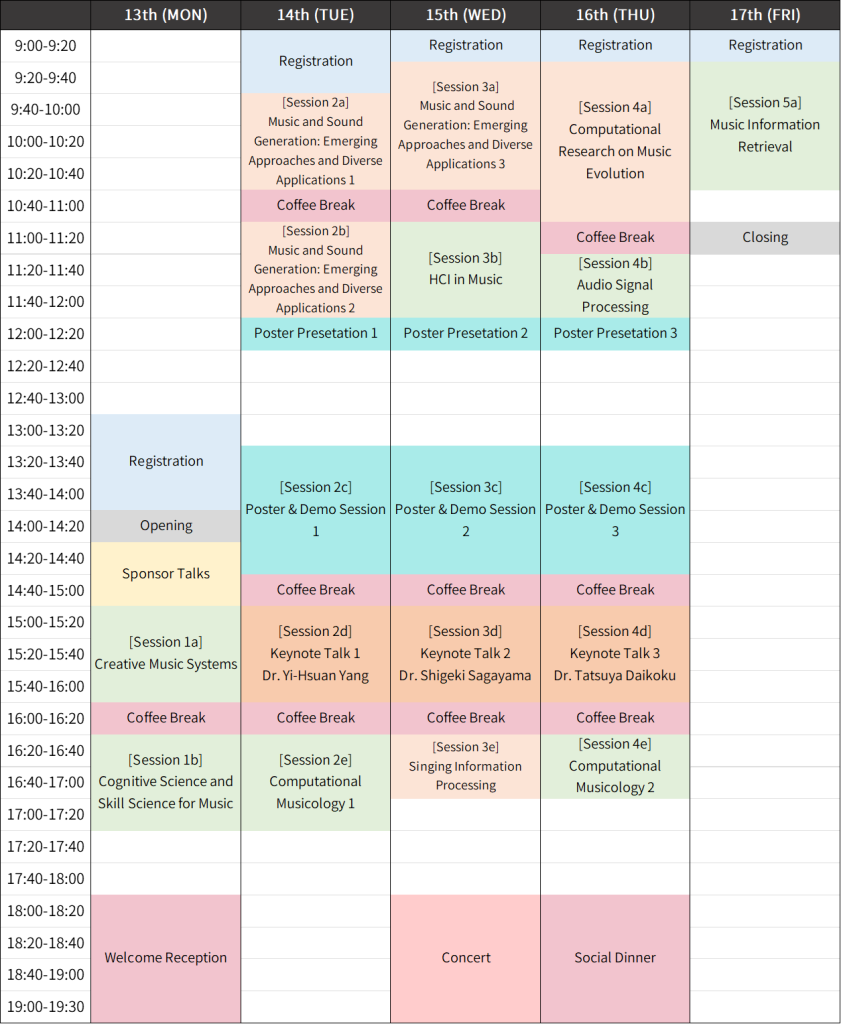

Scientific Program

The proceedings are available online.

The time allotted for oral presentations is 20 minutes (15 minutes for presentation and 5 minutes for Q&A).

13th (Mon)

14:00-14:20 Opening

14:20-15:00 Sponsor Talks

15:00-16:00 Session 1a: Creative Music Systems

Chair: Tatsunori Hirai

[1a-1] Controllable Automatic Melody Composition Model across Pitch/Stress-accent Languages [pdf]

Takuya Takahashi, Shigeki Sagayama and Toru Nakashika

[1a-2] Design of a music recognition, encoding, and transcription online tool [pdf]

David Rizo, Jorge Calvo-Zaragoza, Juan C. Martínez-Sevilla, Adrián Roselló and Eliseo Fuentes-Martínez

[1a-3] Verse Generation by Reverse Generation Considering Rhyme and Answer in Japanese Rap Battles [pdf]

Ryota Mibayashi, Takehiro Yamamoto, Kosetsu Tsukuda, Kento Watanabe, Tomoyasu Nakano, Masataka Goto and Hiroaki Ohshima

16:20-17:20 Session 1b: Cognitive Science and Skill Science for Music

Chair: Sølvi Ystad

[1b-1] Combining Vision and EMG-Based Hand Tracking for Extended Reality Musical Instruments [pdf]

Max Graf and Mathieu Barthet

[1b-2] Emotional Impact of Source Localization in Music Using Machine Learning and EEG: a proof-of-concept study [pdf]

Timothy Schmele, Eleonora De Filippi, Arijit Nandi, Alexandre Pereda Baños and Adan Garriga

[1b-3] Exploring Patterns of Skill Gain and Loss on Long-term Training and Non-training in Rhythm Game [pdf]

Ayane Sasaki, Mio Matsuura, Masaki Matsubara, Yoshinari Takegawa and Keiji Hirata

14th (Tue)

9:40-10:40 Session 2a: Special Session – Music and Sound Generation: Emerging Approaches and Diverse Applications 1

Chair: Taketo Akama

[2a-1] Benzaiten: A Non-expert-friendly Event of Automatic Melody Generation Contest [pdf]

Yoshitaka Tomiyama, Tetsuro Kitahara, Taro Masuda, Koki Kitaya, Yuya Matsumura, Ayari Takezawa, Tsuyoshi Odaira and Kanako Baba

[2a-2] Pitch Class and Octave-Based Pitch Embedding Training Strategies for Symbolic Music Generation [pdf]

Yuqiang Li, Shengchen Li and George Fazekas

[2a-3] VaryNote: A Method to Automatically Vary the Number of Notes in Symbolic Music [pdf]

Juan M. Huerta, Bo Liu and Peter Stone

11:00-12:00 Session 2b: Special Session – Music and Sound Generation: Emerging Approaches and Diverse Applications 2

Chair: Taketo Akama

[2b-1] ShredGP: Guitarist Style-Conditioned Tablature Generation with Transformers [pdf]

Pedro Sarmento, Adarsh Kumar, Dekun Xie, CJ Carr, Zack Zukowski and Mathieu Barthet

[2b-2] ProgGP: From GuitarPro Tablature Neural Generation To Progressive Metal Production [pdf]

Jackson Loth, Pedro Sarmento, CJ Carr, Zack Zukowski and Mathieu Barthet

[2b-3] Reconstructing Human Expressiveness in Piano Performances with a Transformer Network [pdf]

Jingjing Tang, Geraint Wiggins and György Fazekas

13:20-14:40 Session 2c: Poster & Demo Session 1

[Poster]

[2c-P1] Effective Textual Feedback in Musical Performance Education: A Quantitative Analysis Across Oboe, Piano, and Guitar [pdf]

Rina Kagawa, Nami Iino, Hideaki Takeda and Masaki Matsubara

[2c-P2] A Melody Input Support Interface by Presenting Subsequent Candidates based on a Connection Cost [pdf]

Tatsunori Hirai

[2c-P3] Phoneme-inspired playing technique representation and its alignment method for electric bass database [pdf]

Junya Koguchi and Masanori Morise

[2c-P4] An Audio-to-Audio Approach to Generate Bass Lines from Guitar’s Chord Backing [pdf]

Tomoo Kouzai and Tetsuro Kitahara

[2c-P5] Teaching Chorale Generation Model to Avoid Parallel Motions [pdf]

Eunjin Choi, Hyerin Kim, Juhan Nam and Dasaem Jeong

[2c-P6] DiffVel: Note-Level MIDI Velocity Estimation for Piano Performance by A Double Conditioned Diffusion Model [pdf]

Hyon Kim and Xavier Serra

[2c-P7] 8+8=4: Formalizing Time Units to Handle Symbolic Music Durations [pdf]

Emmanouil Karystinaios, Francesco Foscarin, Florent Jacquemard, Masahiko Sakai, Satoshi Tojo and Gerhard Widmer

[2c-P8] Soundscape4DEI as a Model for Multilayered Sonifications [pdf]

João Neves, Pedro Martins, F. Amílcar Cardoso, Jônatas Manzolli, Mariana Seiça and M. Zenha Rela

[Demo]

[2c-D1] AR-based Guitar Strumming Learning Support System that Provides Audio Feedback by Hand Tracking [pdf]

Kaito Abiki, Saizo Aoyagi, Akira Hattori, Ken Honda and Tatsunori Hirai

[2c-D2] The Demonstration of MVP Support System as an AR Realtime Pitch Feedback System [pdf]

Yasumasa Yamaguchi, Taku Kawada, Toru Nagahama and Tatsuya Horita

[2c-D3] Melody Reduction for Beginners’ Guitar Practice [pdf]

Hinata Segawa, Shunsuke Sakai and Tetsuro Kitahara

[2c-D4] Structural Analysis of Utterances during Guitar Instruction [pdf]

Nami Iino, Hiroya Miura, Hideaki Takeda, Masatoshi Hamanaka and Takuichi Nishimura

[2c-D5] Music in the Air: Creating Music from Practically Inaudible Ambient Sound [pdf]

Ji Won Yoon and Woon Seung Yeo

[2c-D6] Creating an interactive and accessible remote performance system with the Piano Machine [pdf]

Patricia Alessandrini, Constantin Basica and Prateek Verma

[2c-D7] A Singing Toolkit: Gestural Control of Voice Synthesis, Voice Samples and Live Voice [pdf]

D. H. Molina Villota, C. D’Alessandro, G. Locqueville and T. Lucas

[2c-D8] Sonifying Players’ Positional Relation in Football [pdf]

Masaki Okuta and Tetsuro Kitahara

[2c-D9] Talking with Fish: an OpenCV Musical Installation [pdf]

Gabriel Zalles Ballivian

15:00-16:00 Session 2d: Keynote Talk 1

Chair: Keiji Hirata

Deep Learning-based Automatic Music Generation: An Overview

Yi-Hsuan Yang

16:20-17:20 Session 2e: Computational Musicology 1

Chair: Noriko Otani

[2e-1] Interpretable Rule Learning and Evaluation of Early Twentieth-century Music Styles [pdf]

Christofer Julio, Feng-Hsu Lee and Li Su

[2e-2] Toward empirical analysis for stylistic expression in piano performance [pdf]

Yu-Fen Huang and Li Su

[2e-3] SANGEET: A XML based Open Dataset for Research in Hindustani Sangeet [pdf]

Chandan Misra and Swarup Chattopadhyay

15th (Wed)

9:20-10:40 Session 3a: Special Session – Music and Sound Generation: Emerging Approaches and Diverse Applications 3

Chair: Taketo Akama

[3a-1] JAZZVAR: A Dataset of Variations found within Solo Piano Performances of Jazz Standards for Music Overpainting [pdf]

Eleanor Row, Jingjing Tang and György Fazekas

[3a-2] A Live Performance Rule System informed by Irish Traditional Dance Music [pdf]

Marco Amerotti, Steve Benford, Bob L. T. Sturm and Craig Vear

[3a-3] VERSNIZ – Audiovisual Worldbuilding through Live Coding as a Performance Practice in the Metaverse [pdf]

Damian Dziwis

[3a-4]

11:00-12:00 Session 3b: HCI in Music

Chair: Mathieu Barthet

[3b-1] Networked performance as a space for collective creation and student engagement [pdf]

Hans Kretz

[3b-2] eLabOrate(D): An Exploration of Human/Machine Collaboration in a Telematic Deep Listening Context [pdf]

Rory Hoy and Doug Van Nort

[3b-3] Estimating Interaction Time in Music Notation Editors [pdf]

Matthias Nowakowski and Aristotelis Hadjakos

13:20-14:40 Session 3c: Poster & Demo Session 2

[Poster]

[3c-P1] Human-Swarm Interactive Music Systems: Design, Algorithms, Technologies, and Evaluation [pdf]

Pedro Lucas and Kyrre Glette

[3c-P2] Improving Instrumentality of Sound Collage Using CNMF Constraint Model [pdf]

Sora Miyaguchi, Naotoshi Osaka and Yusuke Ikeda

[3c-P3] Quantum Circuit Design using Genetic Algorithm for Melody Generation with Quantum Computing [pdf]

Tatsunori Hirai

[3c-P4] Automated Arrangements of Multi-Part Music for Sets of Monophonic Instruments [pdf]

Matthew McCloskey, Gabrielle Curcio, Amulya Badineni, Kevin McGrath, Georgios Papamichail and Dimitris Papamichail

[3c-P5] Automatic Orchestration of Piano Scores for Wind Bands with User-Specified Instrumentation [pdf]

Takuto Nabeoka, Eita Nakamura and Kazuyoshi Yoshii

[3c-P6] A quantitative evaluation of a musical performance support system utilizing a musical sophistication test battery [pdf]

Yasumasa Yamaguchi, Taku Kawada, Toru Nagahama and Tatsuya Horita

[3c-P7] SBERT-based Chord Progression Estimation from Lyrics Trained with Imbalanced Data [pdf]

Mastuti Puspitasari, Takuya Takahashi, Gen Hori, Shigeki Sagayama and Toru Nakashika

[3c-P8] PolyDDSP: A Lightweight and Polyphonic Differentiable Digital Signal Processing Library [pdf]

Tom Baker, Ricardo Climent and Ke Chen

[3c-P9] The Unfinder: Finding and reminding in electronic music

Rikard Lindell and Henrik Frisk

*Moved to [4c-P9] on Thursday

[Demo]

[3c-D1] The Sound Morphing Toolbox: Musical Instrument Sound Modeling and Transformation Techniques [pdf]

Marcelo Caetano and Richard Kronland-Martinet

[3c-D2] Morphing of Drum Loop Sound Sources Using CNN-VAE [pdf]

Mizuki Kawahara, Tomoo Kouzai and Tetsuro Kitahara

[3c-D3] Generating Tablature of Polyphony Consisting of Melody and Bass Line [pdf]

Shunsuke Sakai, Hinata Segawa and Tetsuro Kitahara

[3c-D4] Development of an easily-usable smartphone application for recording instrumental sounds [pdf]

Takanori Horibe and Masanori Morise

[3c-D5] A Research on Music Generation by Deep-Learning including ornaments – A case study of world harp instruments – [pdf]

Arturo Alejandro Arzamendia Lopez, Akinori Ito and Koji Mikami

[3c-D6] Automatic Music Composition System to Enjoy Brewing Delicious Coffee [pdf]

Noriko Otani, So Hirawata and Daisuke Okabe

[3c-D7] Expressor: A Transformer Model for Expressive MIDI Performance [pdf]

Tolly Collins and Mathieu Barthet

[3c-D8] Real-Time Piano Accompaniment Using Kuramoto Model for Human-Like Synchronization [pdf]

Kit Armstrong, Ji-Xuan Huang, Tzu-Ching Hung, Jing-Heng Huang and Yi-Wen Liu

[3c-D9] Intuitive Control of Scraping and Rubbing Through Audio-tactile Synthesis [pdf]

Mitsuko Aramaki, Corentin Bernard, Richard Kronland-Martinet, Samuel Poirot and Sølvi Ystad

15:00-16:00 Session 3d: Keynote Talk 2

Chair: Keiji Hirata

17 Years with Automatic Music Composition System “Orpheus”

Shigeki Sagayama

16:20-17:00 Session 3e: Special Session – Singing Information Processing

Chair: Tomoyasu Nakano

[3e-1] Towards Potential Applications of Machine Learning in Computer-Assisted Vocal Training [pdf]

Antonia Stadler, Emilia Parada-Cabaleiro and Markus Schedl

[3e-2] Effects of Convolutional Autoencoder Bottleneck Width on StarGAN-based Singing Technique Conversion [pdf]

Tung-Cheng Su, Yung-Chuan Chang and Yi-Wen Liu

16th (Thu)

9:20-11:00 Session 4a: Special Session – Computational Research on Music Evolution

Chair: Eita Nakamura

[4a-1] Historical Changes of Modes and their Substructure Modeled as Pitch Distributions in Plainchant from the 1100s to the 1500s [pdf]

Eita Nakamura, Tim Eipert and Fabian C. Moss

[4a-2] Computational Analysis of Selection and Mutation Probabilities in the Evolution of Chord Progressions [pdf]

Eita Nakamura

[4a-3] A network approach to harmonic evolution and complexity in western classical music [pdf]

Marco Buongiorno Nardelli

[4a-4] On the Analysis of Voicing Novelty in Classical Piano Music [pdf]

Halla Kim and Juyong Park

[4a-5] Bipartite network analysis of the stylistic evolution of sample-based music [pdf]

Dongju Park and Juyong Park

11:20-12:00 Session 4b: Audio Signal Processing

Chair: Mitsuko Aramaki

[4b-1] Algorithms for Roughness Control Using Frequency Shifting and Attenuation of Partials in Audio [pdf]

Jeremy Hyrkas

[4b-2] Bridging the Rhythmic Gap: A User-Centric Approach to Beat Tracking in Challenging Music Signals [pdf]

António Sá Pinto and Gilberto Bernardes

13:20-14:40 Session 4c: Poster & Demo Session 3

[Poster]

[4c-P1] Creating a New Lullaby Using an Automatic Music Composition System in Collaboration with a Musician [pdf]

So Hirawata, Noriko Otani, Daisuke Okabe and Masayuki Numao

[4c-P2] Automatic Phrasing System for Expressive Performance Based on The Generative Theory of Tonal Music [pdf]

Madoka Goto, Masahiko Sakai and Satoshi Tojo

[4c-P3] NUFluteDB: Flute Sound Dataset with Appropriate and Inappropriate Blowing Styles [pdf]

Sai Oshita and Tetsuro Kitahara

[4c-P4] Melody Blending: A Review and an Experiment [pdf]

Stefano Kalonaris and Omer Gold

[4c-P5] Balancing Musical Co-Creativity: The Case Study of Mixboard, a Mashup Application for Novices [pdf]

Thomas Ottolin, Raghavasimhan Sankaranarayanan, Qinying Lei, Nitin Hugar and Gil Weinberg

[4c-P6] Global Prediction of Time-span Tree by Fill-in-the-blank Task [pdf]

Riku Takahashi, Risa Izu, Yoshinari Takegawa and Keiji Hirata

[4c-P7] Music Emotions in Solo Piano: Bridging the Gap Between Human Perception and Machine Learning [pdf]

Emilia Parada-Cabaleiro, Anton Batliner, Maximilian Schmitt, Björn Schuller and Markus Schedl

[4c-P8] Listeners’ Perceived Emotions in Human vs. Synthetic Performance of Rhythmically Complex Musical Excerpts [pdf]

Ève Poudrier, Bryan Jacob Bell, Jason Yin Hei Lee and Craig Stuart Sapp

[4c-P9] The Unfinder: Finding and reminding in electronic music [pdf]

Rikard Lindell and Henrik Frisk

[Demo]

[4c-D1] From jSymbolic 2 to 3: More Musical Features [pdf]

Cory McKay

[4c-D2] Comparing vocoders for automatic vocal tuning [pdf]

D. H. Molina Villota and C. D’Alessandro

[4c-D3] Music recognition, encoding, and transcription (MuRET) online tool demonstration [pdf]

David Rizo, Jorge Calvo-Zaragoza, Juan C. Martínez-Sevilla, Adrián Roselló and Eliseo Fuentes-Martínez

[4c-D4] Microtonal Music Dataset v1 [pdf]

Tatsunori Hirai, Lamo Nagasaka and Takuya Kato

[4c-D5] Lighting Control based on Colors Associated with Lyrics at Bar Positions [pdf]

Shoyu Shinjo and Aiko Uemura

[4c-D6] Melody Changing Interfaces for Melodic Morphing [pdf]

Masatoshi Hamanaka

[4c-D7] Relative Representation of Time-Span Tree [pdf]

Risa Izu, Yoshinari Takegawa and Keiji Hirata

[4c-D8] Zero-Shot Music Retrieval For Japanese Manga [pdf]

Megha Sharma and Yoshimasa Tsuruoka

[4c-D9] Visualizing Musical Structure of House Music [pdf]

Justin Tomoya Wulf and Tetsuro Kitahara

15:00-16:00 Session 4d: Keynote Talk 3

Chair: Satoshi Tojo

Exploring the Neural and Computational Basis of Statistical Learning in the Brain to Unravel Musical Creativity and Cognitive Individuality

Tatsuya Daikoku

16:20-17:00 Session 4e: Computational Musicology 2

Chair: David Rizo Valero

[4e-1] deepGTTM-IV: Deep Learning Based Time-span Tree Analyzer of GTTM [pdf]

Masatoshi Hamanaka, Keiji Hirata and Satoshi Tojo

[4e-2] Music and Logic: a connection between two worlds [pdf]

Matteo Bizzarri

17th (Fri)

9:20-10:40 Session 5a: Music Information Retrieval

Chair: Ryo Nishikimi

[5a-1] A Novel Local Alignment-Based Approach to Motif Extraction in Polyphonic Music [pdf]

Tiange Zhu, Danny Diamond, James McDermott, Raphaël Fournier-S’niehotta, Mathieu d’Aquin and Philippe Rigaux

[5a-2] Predicting Audio Features of Background Music from Game Scenes [pdf]

Ryusei Hayashi and Tetsuro Kitahara

[5a-3] A Music Exploration Interface Based on Vocal Timbre and Pitch in Popular Music [pdf]

Tomoyasu Nakano, Momoka Sasaki, Mayuko Kishi, Masahiro Hamasaki, Masataka Goto and Yoshinori Hijikata

[5a-4] Exploring Diverse Sounds: Identifying Outliers in a Music Corpus [pdf]

Le Cai, Sam Ferguson, Gengfa Fang and Hani Alshamrani